Running Faster by Improving the Accuracy of the Stopwatch: When the Preferred Solution is to Blame the Data for Poor Performance

In August of 2015 (nine months before this writing), I was asked to help improve the process of retiring medical treatment records when service members separate. With clear direction from senior levels of the organization, the urgency of figuring out how to retire the medical treatment records in 45 days or less was palpable.

The problem of late records, at least on the surface, was very solvable. First, the record had to be located. Next, the record was shipped to a scanning facility. Finally, the scanning facility would produce a digital image of the hard-copy file and archive it electronically.

Forty-five days seemed like plenty of time to accomplish the task. The scanning facility, by contract, had 14 days to complete the scanning and archive functions, so the medical treatment facilities had 31 days to locate and ship the record. Because service members generally begin the separation process months in advance, the medical treatment facilities could actually start the process of locating the records early and ship them to the scanning facility on the day of separation.
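The time budget above is simple arithmetic. A minimal Python sketch makes the split explicit, assuming the 45-day clock starts on the separation date and that a record counts as late if it is archived after day 45 (the post does not spell out either detail). The function names are hypothetical, for illustration only.

```python
from datetime import date, timedelta

# Figures from the text: a 45-day overall target, 14 days contracted to the scanning facility.
TOTAL_DAYS = 45
SCANNING_DAYS = 14
MTF_DAYS = TOTAL_DAYS - SCANNING_DAYS  # 31 days left to locate and ship the record


def retirement_deadlines(separation: date) -> dict:
    """Latest dates for each stage, assuming the clock starts on the separation date."""
    return {
        "ship_by": separation + timedelta(days=MTF_DAYS),
        "archive_by": separation + timedelta(days=TOTAL_DAYS),
    }


def is_late(separation: date, archived: date) -> bool:
    """Assumed rule: a record is late if archived more than 45 days after separation."""
    return (archived - separation).days > TOTAL_DAYS


# Hypothetical separation date, used only to illustrate the arithmetic.
deadlines = retirement_deadlines(date(2016, 1, 4))
print(deadlines["ship_by"], deadlines["archive_by"])  # 2016-02-04 2016-02-18
```

If the facility starts locating the record before separation and ships it on the separation date itself, the whole 31-day allowance becomes slack rather than working time.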

At the first stakeholders' meeting that I attended, the project sponsor made it crystal clear in her kickoff message that only one solution would be considered: we must develop a new enterprise-wide IT system to track separations data and compute performance metrics on the speed with which we retire medical treatment records. The sponsor insisted that meeting the 45-day timeline was currently impossible because data about who was separating from service, and when, was less than perfect. Sometimes the names on the separation list changed, because service members made last-minute decisions. Sometimes the separation list was incomplete, because service members occasionally leave service without warning (e.g., if a death occurs). Sometimes the separation list had extra names, like when a reservist is temporarily assigned to active duty and then returns to reserve duty.

When I pointed out that, even if the data were perfectly accurate and instantaneous, over 40% of the records would still be late, the sponsor let me know that I simply did not understand the complexities of the data issue. When I asked how the 'data issue' could cause 5-10% of the records never to be found, the conversation became somewhat heated.

I really didn't understand. How would an expensive enterprise-wide IT system solve the fundamental treatment record management issues that appeared to be the root cause of the delays? Why were we waiting until the service member separated to find the treatment record? Why were some records still hard copy when we had a system with the capability to keep digital records?

Besides, a new IT development would take at least two years to design, develop, test, and field. Senior leadership had made it clear that the problem should be solved by the end of the next fiscal year (September 2016).

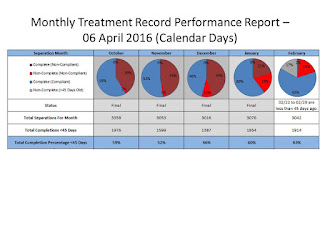

Nine months later, what have we accomplished? The data has improved; we implemented several minor changes to address data accuracy and timeliness. However, the IT solution is still at least a year from being fielded.

Now, we can say with greater accuracy that the treatment records are late and/or lost. We have improved our stopwatch but lost the race.

The irony is that if we had focused on improving the process of managing treatment records, we would have simultaneously improved the quality of the data.

By definition, a better process will generate better data. It does not necessarily work in reverse, though: efforts to improve performance data do not automatically drive a better process.
